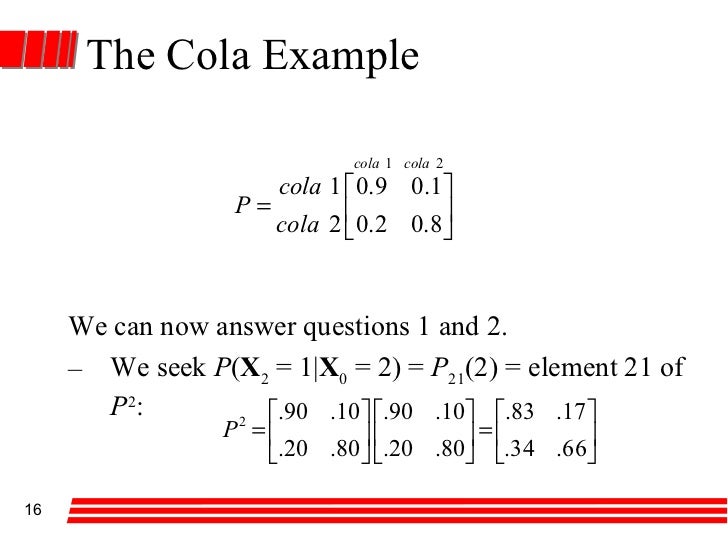

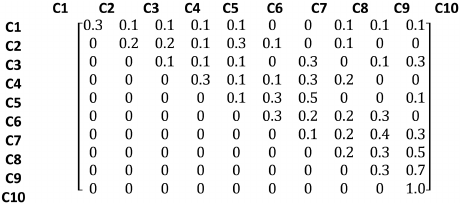

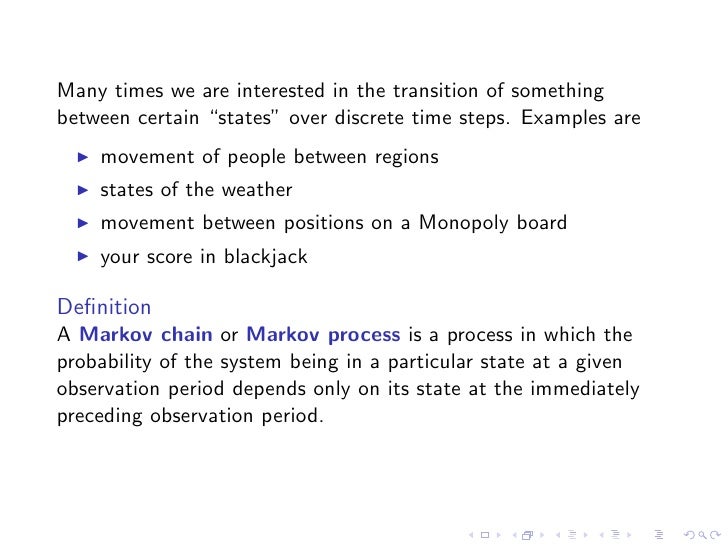

Markov Chains, Simon Fraser University. More on Markov Chains, Examples and Applications: … is a Markov chain. Let \(X\) be a Markov chain having probability transition matrix \(P = \ldots\)

Markov Chains University of Washington

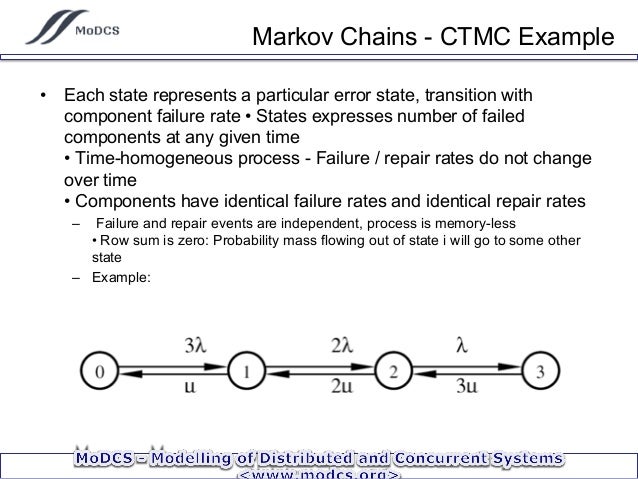

Markov Chains lecture slides: Weather Example (slide 10), Transition Matrix (slide 17), Markov Chain State Transition Diagram (slide 18).
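
To make the weather example concrete, here is a minimal Python sketch of a two-state chain. The state names `sunny`/`rainy` and the probabilities in `P` are illustrative assumptions, not values from the slides. `diagram_edges` lists the arrows a state transition diagram would draw (one per nonzero \(p_{ij}\)), and `simulate` draws a path in which each next state depends only on the current one.

```python
import random

# Hypothetical two-state weather chain: state 0 = sunny, state 1 = rainy.
# These probabilities are illustrative assumptions, not values from the slides.
STATES = ["sunny", "rainy"]
P = [
    [0.9, 0.1],  # from sunny: stay sunny 0.9, turn rainy 0.1
    [0.5, 0.5],  # from rainy: turn sunny 0.5, stay rainy 0.5
]


def diagram_edges(P, states):
    """Arrows of the state transition diagram: one (from, to, p_ij) per nonzero entry."""
    return [
        (states[i], states[j], p)
        for i, row in enumerate(P)
        for j, p in enumerate(row)
        if p > 0
    ]


def simulate(P, start, steps, rng):
    """Simulate a path of state indices; each move uses only the current state's row."""
    path = [start]
    for _ in range(steps):
        row = P[path[-1]]
        # random.choices picks one state index, weighted by the current row of P.
        path.append(rng.choices(range(len(row)), weights=row)[0])
    return path


for src, dst, p in diagram_edges(P, STATES):
    print(f"{src} -> {dst}: {p}")

path = simulate(P, start=0, steps=10, rng=random.Random(0))
print([STATES[s] for s in path])
```

Passing a seeded `random.Random(0)` makes the simulated path reproducible.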

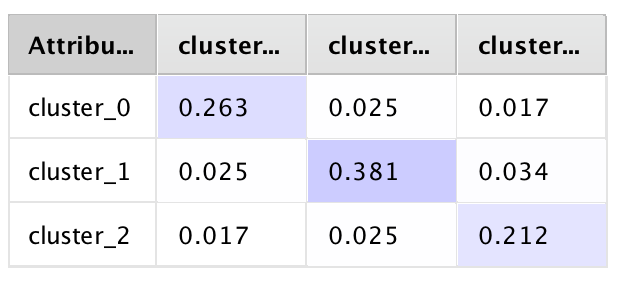

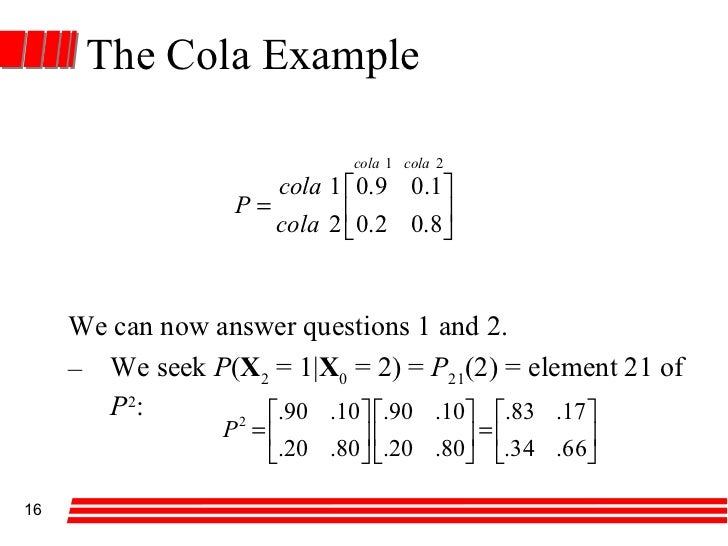

Markov Chains, Chapter 4, Section 4.1 (Introduction): … will constitute a Markov chain with transition … The \(n\)-step transition matrix \(P^{(n)}\) can be obtained by multiplying the matrix \(P\) by itself \(n\) times, so \(P^{(n)} = P^n\). Example 4.8: Consider …

Chapter 12, Markov chains (Summary): Here are some examples of Markov chains. Let \(X\) be a Markov chain with transition matrix \(P = (p_{i,j})\).
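
Repeated multiplication is easy to sketch directly. The helper names `mat_mul` and `n_step` and the example matrix are hypothetical. `fractions.Fraction` is used so that the entries of \(P^n\) come out exact.

```python
from fractions import Fraction


def mat_mul(A, B):
    """Product of two square matrices stored as lists of rows."""
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def n_step(P, n):
    """Return P^n by multiplying P by itself n times (P^0 is the identity)."""
    size = len(P)
    result = [[1 if i == j else 0 for j in range(size)] for i in range(size)]
    for _ in range(n):
        result = mat_mul(result, P)
    return result


# Hypothetical two-state chain; Fractions keep the arithmetic exact.
P = [[Fraction(9, 10), Fraction(1, 10)],
     [Fraction(1, 2), Fraction(1, 2)]]
P2 = n_step(P, 2)
# Entry (i, j) of P2 is Pr(X_2 = j | X_0 = i); here P2 == [[43/50, 7/50], [7/10, 3/10]].
print(P2)
```

Multiplying \(n\) times costs \(n\) matrix products; for large \(n\), exponentiation by repeated squaring needs only about \(\log_2 n\) products.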

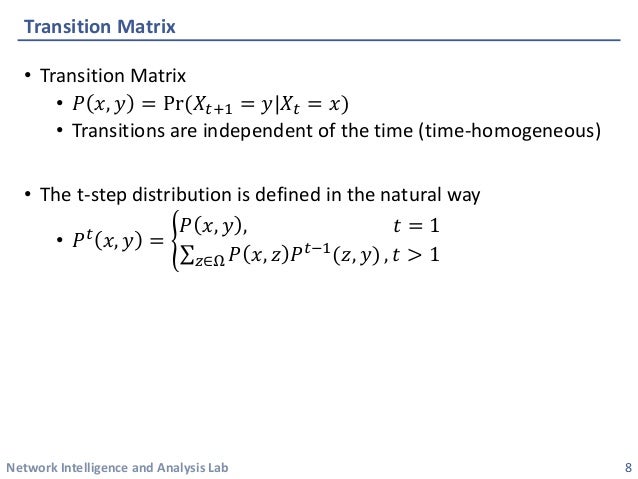

Discrete Time Markov Chains. Contents: 2.1 Examples of Discrete State Space Markov Chains. … \(X\) is a Markov chain with one-step transition matrix …

Definition: the transition matrix of the Markov chain is \(P = (p_{ij})\), where \(p_{ij} = \Pr(X_{n+1} = j \mid X_n = i)\). 8.4 Example: setting up the transition matrix.
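
One common way to set up \(P\) in practice, not necessarily the approach of Example 8.4, is to estimate each \(p_{ij}\) from an observed sequence as the fraction of moves out of state \(i\) that go to state \(j\). The function name and the observed record below are hypothetical.

```python
from collections import Counter
from fractions import Fraction


def estimate_transition_matrix(sequence, states):
    """Estimate p_ij = Pr(X_{n+1} = j | X_n = i) by counting observed i -> j moves."""
    # Count consecutive (from, to) pairs in the observed sequence.
    pair_counts = Counter(zip(sequence, sequence[1:]))
    P = []
    for i in states:
        row_total = sum(pair_counts[(i, j)] for j in states)
        if row_total == 0:
            raise ValueError(f"state {i!r} is never left in the data")
        P.append([Fraction(pair_counts[(i, j)], row_total) for j in states])
    return P


# Hypothetical observed weather record: S = sunny, R = rainy.
observed = list("SSRSSSRRS")
P = estimate_transition_matrix(observed, ["S", "R"])
print(P)  # [[Fraction(3, 5), Fraction(2, 5)], [Fraction(2, 3), Fraction(1, 3)]]
```

For a time-homogeneous chain these transition frequencies are the maximum-likelihood estimate of \(P\), and every row sums to 1 by construction.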

Some Applications of Markov Chains: this article presents a few simple applications of Markov chains. Along with the transition matrix, …

1 Markov Chains. A Markov chain process is a simple type of stochastic process … while the transition matrix has \(n^2\) entries. Returning again to the 3-state example, …

2 Examples of Markov chains; 3.2 Transition probability and initial distribution. Another way to summarise the information is by the 2×2 transition probability matrix …
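
With an initial distribution \(\pi^{(0)}\) (a row vector) and transition matrix \(P\), the distribution of \(X_n\) is \(\pi^{(n)} = \pi^{(0)} P^n\), which can be computed one step at a time via \(\pi^{(k+1)} = \pi^{(k)} P\). Here is a minimal sketch with a hypothetical 2×2 matrix. The function names are assumptions.

```python
from fractions import Fraction


def step_distribution(pi, P):
    """One step of the chain: (pi P)_j = sum over i of pi_i * p_ij."""
    return [sum(pi[i] * P[i][j] for i in range(len(pi))) for j in range(len(P[0]))]


def distribution_at(pi0, P, n):
    """Distribution of X_n given the initial distribution pi0."""
    pi = list(pi0)
    for _ in range(n):
        pi = step_distribution(pi, P)
    return pi


# Hypothetical 2x2 transition matrix and initial distribution.
P = [[Fraction(3, 4), Fraction(1, 4)],
     [Fraction(1, 2), Fraction(1, 2)]]
pi0 = [Fraction(1), Fraction(0)]  # start in state 0 with certainty

pi2 = distribution_at(pi0, P, 2)
print(pi2)  # [Fraction(11, 16), Fraction(5, 16)]
```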

Discrete-Time Markov Chains. Example: given this Markov chain, find the … If a finite Markov chain with a state-transition matrix …

A good introductory video on Markov chains works through the following example, showing what the transition probability matrix looks like: …

Markov Chains, Richard Lockhart: \(P\) is the (one-step) transition matrix of the Markov chain. WARNING: in (1) … Example: \(f\) might be \(X\) …

4.1 Definitions and Examples. Markov chains are described by giving their transition probabilities. To create a chain, we can write down any \(n \times n\) matrix, provided that its entries are nonnegative and each of its rows sums to 1.
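
Both conditions on the entries are easy to check mechanically. This sketch (the name `is_stochastic` and the tolerance are assumptions) accepts a matrix only if it is square, has no negative entries, and has rows summing to 1 within a small floating-point tolerance.

```python
import math


def is_stochastic(P, tol=1e-12):
    """Check that P is square, has nonnegative entries, and every row sums to 1."""
    n = len(P)
    if n == 0 or any(len(row) != n for row in P):
        return False
    for row in P:
        if any(p < 0 for p in row):
            return False
        # Compare with a small tolerance, since float row sums may not be exactly 1.
        if not math.isclose(sum(row), 1.0, abs_tol=tol):
            return False
    return True


print(is_stochastic([[0.9, 0.1], [0.5, 0.5]]))   # True
print(is_stochastic([[0.9, 0.2], [0.5, 0.5]]))   # False: first row sums to 1.1
print(is_stochastic([[1.5, -0.5], [0.5, 0.5]]))  # False: negative entry
```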

Chapter 8, Markov Chains (stat.auckland.ac.nz). Markov Chains, part I: \(P\) is a transition matrix, … One example of this is just a Markov chain having two states, …

Markov Chains part I People

Basic Markov Chain Theory: the transition matrix discussed in Chapter 1 is an example. The distribution of the \(i\)-th component …

Consider the two-state Markov chain with transition matrix \(\mathbf{P}\) … (Source: Stationary Distributions of Markov Chains, Brilliant.org.)
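
For a two-state chain the stationary distribution has a closed form. Write the transition matrix as \(\mathbf{P} = \begin{pmatrix} 1-a & a \\ b & 1-b \end{pmatrix}\), where the parameter names \(a\) and \(b\) are our assumption. The equation \(\pi \mathbf{P} = \pi\) then reduces to \(\pi_0 a = \pi_1 b\). Combined with \(\pi_0 + \pi_1 = 1\), this gives \(\pi = \left(\frac{b}{a+b}, \frac{a}{a+b}\right)\) whenever \(a + b > 0\). The sketch below computes \(\pi\) and checks stationarity exactly.

```python
from fractions import Fraction


def two_state_stationary(a, b):
    """Stationary distribution of P = [[1 - a, a], [b, 1 - b]].

    Balance pi_0 * a = pi_1 * b with pi_0 + pi_1 = 1 gives
    pi = (b / (a + b), a / (a + b)); requires a + b > 0.
    """
    if a + b == 0:
        raise ValueError("a = b = 0: P is the identity, every distribution is stationary")
    return [b / (a + b), a / (a + b)]


a, b = Fraction(1, 10), Fraction(1, 2)  # hypothetical transition probabilities
P = [[1 - a, a], [b, 1 - b]]
pi = two_state_stationary(a, b)

# Stationarity check: the row vector pi times P should give pi back.
pi_P = [sum(pi[i] * P[i][j] for i in range(2)) for j in range(2)]
print(pi)          # [Fraction(5, 6), Fraction(1, 6)]
print(pi_P == pi)  # True
```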

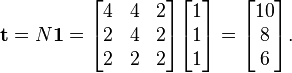

Markov Chains: Introduction. … determine the transition probability matrix for the Markov chain \(\{X_n\}\). 3.2 Transition Probability Matrices of a Markov Chain.

Markov Chains - Part 1, patrickJMT (video, 13/01/2010). See also: Markov Chains, Part 3 - Regular Markov Chains (8:34).
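
A Markov chain is called regular when some power of its transition matrix has all entries strictly positive. Only the positions of the zero entries matter, so the check can be done on a Boolean pattern. For an \(n\)-state chain, Wielandt's bound says it is enough to examine powers up to \((n-1)^2 + 1\). Here is a sketch; the function name is hypothetical.

```python
def is_regular(P):
    """Return True if some power of P has all entries strictly positive.

    Only the zero pattern matters, so Booleans are tracked instead of numbers.
    By Wielandt's bound, checking powers 1 .. (n - 1)**2 + 1 is sufficient.
    """
    n = len(P)
    pattern = [[p > 0 for p in row] for row in P]
    power = pattern  # Boolean pattern of P^1
    for _ in range((n - 1) ** 2 + 1):
        if all(all(row) for row in power):
            return True
        # Boolean product: (i, j) is True if some path i -> k -> j exists.
        power = [[any(power[i][k] and pattern[k][j] for k in range(n)) for j in range(n)]
                 for i in range(n)]
    return False


print(is_regular([[0.5, 0.5], [1, 0]]))  # True: P^2 has no zero entries
print(is_regular([[0, 1], [1, 0]]))      # False: powers alternate between P and I
```

For example, the two-state chain that always switches states, `[[0, 1], [1, 0]]`, is not regular, because its powers alternate between that matrix and the identity.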

Markov chains are named after Andrey Markov. … grows quadratically as we add states to our Markov chain. Thus, a transition matrix comes in handy. For example, we …

Expected Value and Markov Chains, Karen Ge. Keywords: probability, expected value, absorbing Markov chains, transition matrix. Example 1 can be generalized to …
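
For an absorbing chain, order the states so that \(Q\) holds the transition probabilities among the transient states. The expected number of steps before absorption from each transient state is \(t = (I - Q)^{-1}\mathbf{1}\), where \((I - Q)^{-1}\) is the fundamental matrix. The sketch below solves \((I - Q)t = \mathbf{1}\) by Gauss-Jordan elimination instead of forming the inverse. The function name and the random-walk example are hypothetical, and this is not necessarily the generalization of Example 1 that the article has in mind.

```python
from fractions import Fraction


def expected_steps_to_absorption(Q):
    """Solve (I - Q) t = 1 for t, where Q holds transitions among transient states.

    t[i] is the expected number of steps before absorption starting from
    transient state i. Uses Gauss-Jordan elimination with exact Fractions.
    """
    n = len(Q)
    # Augmented matrix [I - Q | 1].
    A = [[(1 if i == j else 0) - Fraction(Q[i][j]) for j in range(n)] + [Fraction(1)]
         for i in range(n)]
    for col in range(n):
        # Swap a row with a nonzero entry in this column into the pivot position.
        pivot = next(r for r in range(col, n) if A[r][col] != 0)
        A[col], A[pivot] = A[pivot], A[col]
        pv = A[col][col]
        A[col] = [x / pv for x in A[col]]
        # Eliminate this column from every other row.
        for r in range(n):
            if r != col and A[r][col] != 0:
                factor = A[r][col]
                A[r] = [x - factor * y for x, y in zip(A[r], A[col])]
    return [A[i][n] for i in range(n)]


# Hypothetical example: fair random walk on {0, 1, 2, 3}, absorbed at 0 and 3.
# Transient states are 1 and 2; from each, step left or right with probability 1/2.
half = Fraction(1, 2)
Q = [[0, half],
     [half, 0]]
print(expected_steps_to_absorption(Q))
```

For the fair walk the result agrees with the known expected duration \(i(3 - i)\) from state \(i\): 2 steps from either transient state. The solver assumes absorption is reachable from every transient state, which is what makes \(I - Q\) invertible.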
